The Writing Center and AI

The AI Problem

Over a year ago, we asked our TAs how they felt about incorporating generative artificial intelligence tools (GAITs) like ChatGPT and CoPilot into sessions with students, as many writing centers had started to do. We also asked them to describe when and in what ways GAITs might be used effectively to teach writing. Some responded that GAITs should never be used by students because of the risk of short-circuiting the learning process. But several thought it might be acceptable for students to use AI to check and correct grammar. Some proposed using GAITs for brainstorming and finding sources. A few suggested AI could help students generate an outline, test an argument, refine a thesis statement, or develop topic sentences. None of our TAs thought it was acceptable for a student to use AI tools to draft passages of text or full papers, but when we mapped their responses onto the writing process – traditionally defined as a series of stages from brainstorming to proofreading – there was nothing left. There was, in other words, NO consensus on when, or at which point of the writing process, GAITs might be useful in teaching students how to write.

Moreover, our TAs’ sense of how GAITs could be incorporated into writing instruction was very fuzzy. They had all taken a three-semester-hour course on writing pedagogy (and therefore had more training than most faculty), but their ideas primarily involved using AI to generate text – descriptions of topics, or outlines, or thesis statements, or alternative wording – and then asking students to evaluate and revise what it produced. But GAITs produce text that is so sophisticated that analyzing and revising it requires advanced critical thinking and writing skills that take years to develop. It requires, in other words, a level of expertise that most undergraduate students are only beginning to develop.

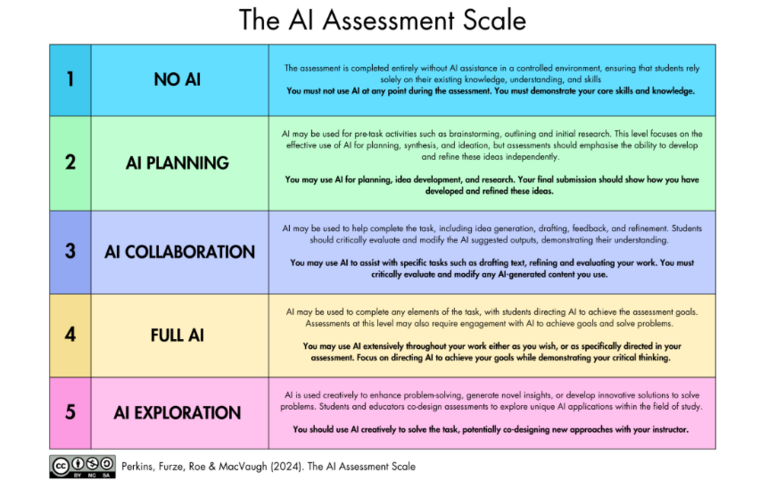

This problem – a lack of clarity on what pedagogically sound uses of GAITs actually look like – has only become worse as these tools have become even more powerful and colleges and universities race to embrace them. Take, for example, this AI Assessment Scale, marketed to instructors as a “nuanced framework” for integrating AI into educational assessment and instruction.

While level 2 of the scale, AI Planning, might, at first glance, appear to be similar to how students use Google, the ability of AI to actually do the “planning, synthesis, and ideation” for the student makes it profoundly different. Asking questions, synthesizing different kinds of ideas, developing arguments, and analyzing evidence – these are all cognitively challenging tasks that help students develop strong thinking skills. They are tasks that literally build the student’s brain by creating new circuits and strengthening existing neural networks. Outsourcing these tasks to AI short-circuits this brain-building process. Level 3, AI Collaboration, incorporates AI at every stage of the writing process, from “idea generation” to “refinement,” and the admonishment to ensure that students “critically evaluate and modify the AI suggested outputs” ignores the question of whether students have the ability to do this effectively. Nor are there any examples of what “collaborating” with AI should look like in practice when you’re trying to help a student build their own writing skills rather than just showing them how to use GAITs to produce writing for them.

This is the problem that has driven the development of a Writing Center AI policy, a policy that centers learning, rather than AI competency. We don’t ban AI, but (in addition to asking to see any relevant course AI policies) our TAs are expected to follow these guidelines when working with students:

- Any use of GAITs in a teaching session should center evidence-based pedagogical practices designed to strengthen the student’s writing and thinking skills (see here for examples).

- The end goal should always be to scaffold students to a place where they can deploy the desired skills themselves.

- Unless an assignment requires a different tool, TAs should direct students to the Writing Center’s WriteStrong CoPilot agent (bot), which has been designed with constraints that prevent it from producing text that a student can import into their work.